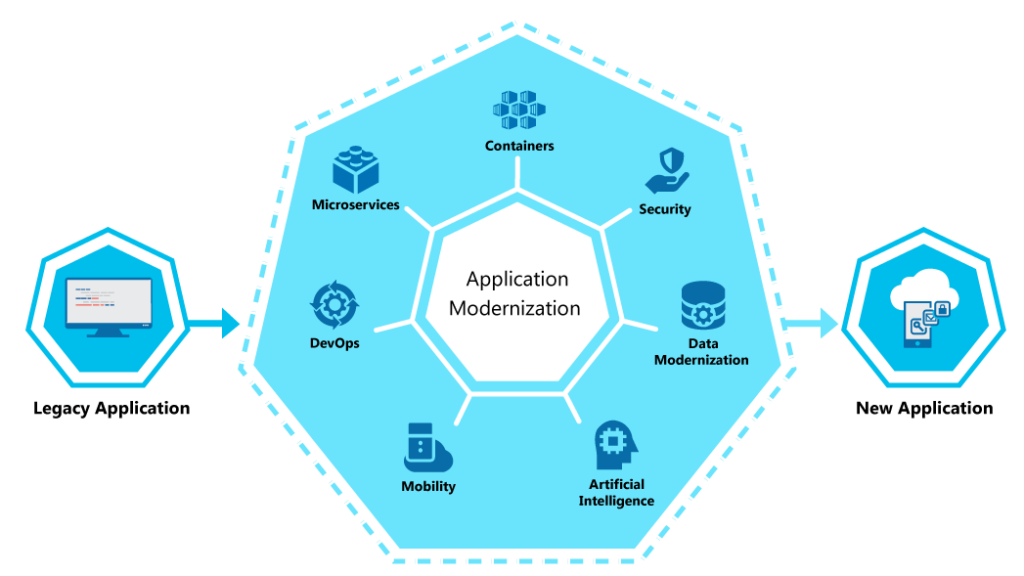

A legacy application is an outdated software program. Most enterprises still use legacy applications and systems that continue to serve critical business needs. We had been working with our legacy application for 10 to 12 years. The typical challenge we faced was keeping the legacy application running while converting it to newer, more efficient code that makes use of current technology and programming languages.

Although a legacy application may still work, it can be unstable because of compatibility issues with current operating systems, browsers, and information technology (IT) infrastructure. So we decided to migrate our legacy application to a cloud native environment.

Cloud native is an approach to building applications as micro-services and running them on containerized, dynamically orchestrated platforms that fully exploit the advantages of the cloud computing model. We run our applications in modern, dynamic environments such as public, private, and hybrid clouds. Our main goals are to improve speed, reliability, and scalability and, ultimately, our margin in the market.

The AWS Cloud includes many design patterns and architectural options that we can apply to a wide variety of use cases. Some key design principles of the AWS Cloud include scalability, disposable resources, automation, loose coupling, managed services instead of servers, and flexible data storage options.

Systems that are expected to grow over time need to be built on top of a scalable architecture. Such an architecture can support growth in users, traffic, or data size with no drop in performance. It should scale linearly, so that adding extra resources results in at least a proportional increase in the ability to serve additional load. Growth should introduce economies of scale, and cost should follow the same dimension that generates business value out of that system. While cloud computing provides virtually unlimited on-demand capacity, our design needs to be able to take advantage of those resources seamlessly.

There are generally two ways to scale an IT architecture: vertically and horizontally.

Scaling Vertically

Scaling vertically takes place through an increase in the specifications of an individual resource, such as upgrading a server with a larger hard drive or a faster CPU. This way of scaling can eventually reach a limit, and it is not always a cost-efficient or highly available approach.

Scaling Horizontally

Scaling horizontally takes place through an increase in the number of resources, such as adding more hard drives to a storage array or adding more servers to support an application.
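To make the horizontal case concrete, here is a minimal Python sketch that estimates how many identical instances are needed for a given peak load. It assumes the linear scaling described above (each instance adds the same capacity); the function name, the requests-per-second figures, and the 20% headroom default are illustrative assumptions, not measurements from our system.

```python
import math


def instances_needed(peak_rps: float, rps_per_instance: float, headroom: float = 0.2) -> int:
    """Estimate how many identical instances can serve peak_rps with spare headroom.

    Assumes linear horizontal scaling: every added instance contributes the same
    capacity (rps_per_instance). All figures are hypothetical.
    """
    if rps_per_instance <= 0:
        raise ValueError("rps_per_instance must be positive")
    required_rps = peak_rps * (1 + headroom)
    # Round up: a fraction of an instance still means launching a whole one.
    return max(1, math.ceil(required_rps / rps_per_instance))


# Scaling horizontally: doubling the load roughly doubles the instance count.
print(instances_needed(1000, 250))  # 1200 rps needed -> 5 instances
print(instances_needed(2000, 250))  # 2400 rps needed -> 10 instances
```

A single vertically scaled server has no equivalent formula past its largest available size, which is the limit mentioned for vertical scaling.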

Not all architectures are designed to distribute their workload to multiple resources, so let’s examine some possible scenarios.

A stateless application is an application that does not need knowledge of previous interactions and does not store session information. Each session is carried out as if it were the first, and responses do not depend on data from a previous session. For example, an application that, given the same input, provides the same response to any end user is a stateless application. Instances of such an application do not need to be aware of their peers; all that is required is a way to distribute the workload to them.
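Because any instance of a stateless application can answer any request, distributing the workload can be as simple as rotating through the instances. The Python sketch below illustrates that idea; the `handle` function, the instance names, and the round-robin policy are assumptions made for illustration rather than a description of our production setup.

```python
from itertools import cycle
from typing import Callable, Dict, List, Tuple

Handler = Callable[[Dict[str, str]], Dict[str, str]]


def handle(request: Dict[str, str]) -> Dict[str, str]:
    """Stateless handler: the response depends only on the incoming request."""
    return {"greeting": f"Hello, {request['name']}!"}


class RoundRobinBalancer:
    """Sends each request to the next instance in turn; no session state is kept."""

    def __init__(self, instances: List[Tuple[str, Handler]]):
        self._next = cycle(instances)

    def dispatch(self, request: Dict[str, str]) -> Tuple[str, Dict[str, str]]:
        instance_id, handler = next(self._next)
        return instance_id, handler(request)


# Three interchangeable copies of the same stateless handler (hypothetical names).
balancer = RoundRobinBalancer([("instance-a", handle), ("instance-b", handle), ("instance-c", handle)])
for _ in range(4):
    print(balancer.dispatch({"name": "Ada"}))
```

Every instance returns the same response for the same request, so it does not matter which one the balancer picks.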

Inevitably, there will be layers of our architecture that we won't turn into stateless components. By definition, databases are stateful. Many legacy applications were designed to run on a single server, relying on local compute resources. Other use cases might require client devices to maintain a connection to a specific server for prolonged periods. For the database layer, we used Amazon RDS (Relational Database Service) to manage our data on AWS.
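When a client must keep talking to the same server, the load balancer needs a deterministic way to map that client to a server. The Python sketch below uses a stable hash of a client ID; the server names and the simple modulo scheme are assumptions for illustration. Simple modulo hashing remaps most clients whenever the pool size changes, which is why consistent hashing or load-balancer cookies (such as the sticky sessions offered by Elastic Load Balancing) are common alternatives.

```python
import hashlib
from typing import Sequence

# Hypothetical pool of servers that hold per-client state.
SERVERS = ["server-1", "server-2", "server-3"]


def pick_server(client_id: str, servers: Sequence[str] = SERVERS) -> str:
    """Map a client to the same server every time (session affinity)."""
    # Use SHA-256 rather than hash(): Python's built-in str hash is randomized per process.
    digest = hashlib.sha256(client_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(servers)
    return servers[index]


for client in ["alice", "bob", "alice"]:
    print(client, "->", pick_server(client))
```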

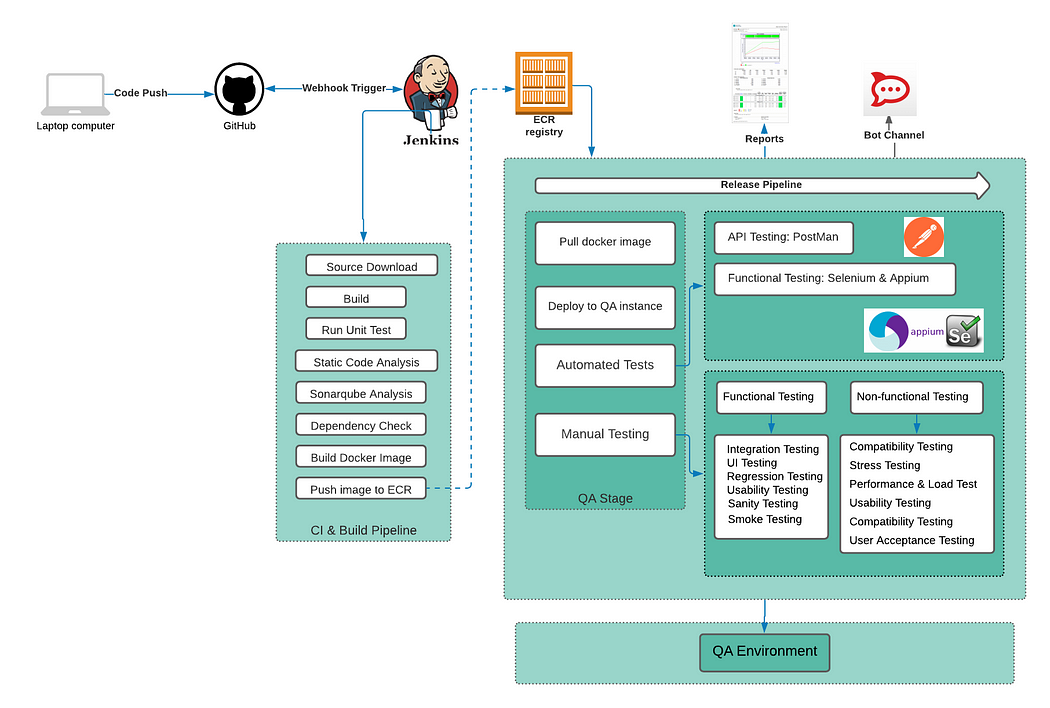

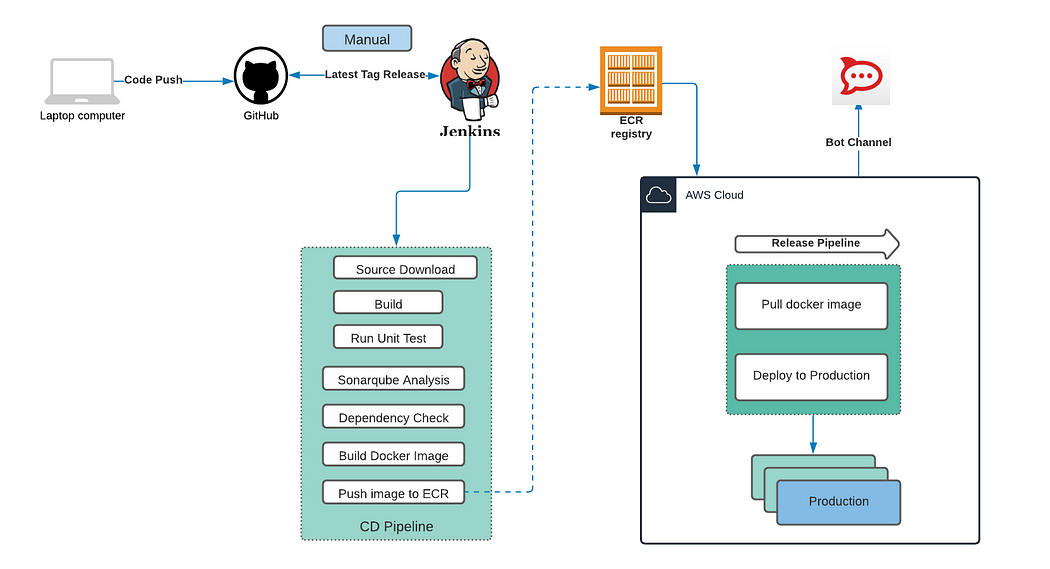

Agile and DevOps are two software development methodologies with similar goals: getting the end product out as quickly and efficiently as possible. DevOps and Agile work well together because they complement each other. DevOps promotes a fully automated continuous integration and deployment pipeline to enable frequent releases, while Agile provides the ability to adapt rapidly to changing requirements and fosters better collaboration between smaller teams.

Continuous integration (CI) and continuous delivery (CD) together form a set of operating principles that enable application development teams to deliver code changes more frequently and reliably, in smaller increments. The technical goal of CI is to establish a consistent, automated way to build, package, and test applications. With a consistent integration process in place, teams are more likely to commit code changes frequently, which leads to better collaboration and software quality.

Continuous delivery picks up where continuous integration ends. CD automates the delivery of applications to selected infrastructure environments. It takes the package built by CI, deploys it into multiple environments such as Dev, QA, Performance, and Staging, runs various tests (integration tests, performance tests, and so on), and finally deploys it into production. Continuous delivery normally keeps a few manual steps in the pipeline, whereas continuous deployment is a fully automated pipeline that covers the complete process from code check-in to production deployment.
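The promotion flow described above can be sketched in Python as follows. This is a toy model under stated assumptions: the `Stage` type, the stage list, the artifact name, and the always-passing lambda tests are hypothetical placeholders, and a real pipeline would call actual build, deploy, and test tooling. The only difference between the two runs is whether the manual approval before production is granted, which mirrors the difference between continuous delivery and continuous deployment.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Stage:
    environment: str
    tests: List[Callable[[], bool]] = field(default_factory=list)
    manual_approval: bool = False  # a human gate, typical of continuous delivery


def run_pipeline(artifact: str, stages: List[Stage], approve: Callable[[str], bool]) -> List[str]:
    """Promote one CI-built artifact through the stages; return where it was deployed."""
    deployed: List[str] = []
    for stage in stages:
        if stage.manual_approval and not approve(stage.environment):
            break  # release is held until someone approves it
        # Placeholder for the real deployment step.
        deployed.append(f"{stage.environment}:{artifact}")
        if not all(test() for test in stage.tests):
            break  # a failing test stops promotion to later environments
    return deployed


stages = [
    Stage("dev", tests=[lambda: True]),
    Stage("qa", tests=[lambda: True]),           # e.g. integration tests
    Stage("performance", tests=[lambda: True]),  # e.g. performance tests
    Stage("staging", tests=[lambda: True]),
    Stage("production", manual_approval=True),
]

# Continuous delivery: the approval is withheld, so the build stops at staging.
print(run_pipeline("app-1.4.2", stages, approve=lambda env: False))
# Continuous deployment: no human in the loop, equivalent to always approving.
print(run_pipeline("app-1.4.2", stages, approve=lambda env: True))
```

Note that the same artifact string flows through every stage: the package is built once by CI and only promoted afterwards.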

In the containerization process, we package our software code with its dependencies and deploy it across multi-stage environments. In simpler terms, we encapsulate an application together with its required environment. We then push our Docker container images to Amazon ECR (Elastic Container Registry) and deploy them to Amazon EKS (Elastic Kubernetes Service) across our different environments.
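The sketch below shows, in Python, how one image pushed to ECR can be referenced by Kubernetes Deployment manifests for each environment, so that every environment runs the same build. The account ID, region, repository name, tag, replica counts, and container port are placeholders; the ECR URI follows the registry's `<account>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>` pattern, and `kubectl apply -f` accepts manifests written as JSON as well as YAML.

```python
import json
from typing import Dict


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str) -> str:
    """Build an Amazon ECR image reference."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def deployment_manifest(name: str, image: str, replicas: int, environment: str) -> Dict:
    """Return a minimal Kubernetes apps/v1 Deployment as a Python dict."""
    labels = {"app": name, "env": environment}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{name}-{environment}", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {"name": name, "image": image, "ports": [{"containerPort": 8080}]}
                    ]
                },
            },
        },
    }


# Placeholder values; the same immutable image is promoted to every environment.
image = ecr_image_uri("123456789012", "us-east-1", "legacy-app", "1.4.2")
for env, replicas in [("qa", 1), ("production", 3)]:
    print(json.dumps(deployment_manifest("legacy-app", image, replicas, env), indent=2))
```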

Infrastructure as Code (IaC) is the process of managing and provisioning computer data centers through machine-readable definition files, rather than through physical hardware configuration or interactive configuration tools. We manage our infrastructure setup with Ansible and Terraform. Using them, we provisioned our new infrastructure within half an hour, with all requirements met.
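The core idea behind declarative IaC tools is to compare the desired state written in definition files with what actually exists, then compute the changes needed. The Python sketch below models that comparison in the spirit of a `terraform plan`; it is not how Terraform or Ansible are implemented, and the resource names and attributes are hypothetical.

```python
from typing import Dict, List

Resources = Dict[str, Dict[str, object]]


def plan(desired: Resources, actual: Resources) -> Dict[str, List[str]]:
    """List the resources to create, update, or delete to reach the desired state."""
    create = sorted(name for name in desired if name not in actual)
    delete = sorted(name for name in actual if name not in desired)
    update = sorted(
        name for name in desired if name in actual and desired[name] != actual[name]
    )
    return {"create": create, "update": update, "delete": delete}


# Desired state, as it might be declared in definition files (hypothetical).
desired = {
    "vpc-main": {"cidr": "10.0.0.0/16"},
    "eks-cluster": {"node_count": 3},
    "rds-primary": {"engine": "postgres", "multi_az": True},
}
# What currently exists in the account (hypothetical).
actual = {
    "vpc-main": {"cidr": "10.0.0.0/16"},
    "eks-cluster": {"node_count": 2},
    "old-bastion": {"instance_type": "t3.micro"},
}

print(plan(desired, actual))
# Once reality matches the definitions, planning again finds nothing to do.
print(plan(desired, desired))
```

This repeatability is what makes fast, predictable provisioning possible: the same definition files can rebuild an environment from scratch or simply confirm that nothing has drifted.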

Relational databases (also known as RDBMS or SQL databases) normalize data into well-defined tabular structures known as tables, which consist of rows and columns. They provide a powerful query language, flexible indexing capabilities, strong integrity controls, and the ability to combine data from multiple tables quickly and efficiently. Amazon RDS makes it easy to set up, operate, and scale a relational database in the cloud, with support for many familiar database engines. We use the AWS managed RDS service for our production servers.
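To illustrate normalized tables, integrity controls, indexing, and joins, here is a small Python example. It uses SQLite from the standard library as a local stand-in so it runs anywhere; against an RDS instance you would connect with the driver for your chosen engine instead. The table names and data are made up.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled

# Normalized schema: customer details live in one table, orders reference them by id.
conn.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL);
CREATE TABLE orders (
    id INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),
    total REAL NOT NULL
);
CREATE INDEX idx_orders_customer ON orders(customer_id);
INSERT INTO customers (id, name) VALUES (1, 'Acme'), (2, 'Globex');
INSERT INTO orders (customer_id, total) VALUES (1, 120.0), (1, 80.0), (2, 50.0);
""")

# Combine the two tables with a join and aggregate per customer.
rows = conn.execute("""
SELECT c.name, COUNT(o.id) AS order_count, SUM(o.total) AS revenue
FROM customers AS c
JOIN orders AS o ON o.customer_id = c.id
GROUP BY c.name
ORDER BY c.name
""").fetchall()
print(rows)  # [('Acme', 2, 200.0), ('Globex', 1, 50.0)]
```

Inserting an order for a customer id that does not exist raises `sqlite3.IntegrityError`, which is the kind of integrity control the paragraph refers to.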

As application complexity increases, a desirable attribute of an IT system is that it can be broken into smaller, loosely coupled components. This means that IT systems should be designed in a way that reduces inter-dependencies — a change or a failure in one component should not cascade to other components.

A way to reduce inter-dependencies in a system is to allow the various components to interact with each other only through specific, technology-agnostic interfaces, such as RESTful APIs. In that way, technical implementation details are hidden, so teams can modify the underlying implementation without affecting other components. As long as those interfaces maintain backward compatibility, deployments of different components are decoupled. This granular design pattern is commonly referred to as a micro-services architecture. We aim to achieve this in the near future.
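The following Python sketch shows the principle on a small scale. A `Protocol` stands in for the technology-agnostic interface (in a micro-services setup this would be a RESTful API), and two hypothetical implementations with different internals can be swapped without changing the code that calls them. All class and field names are illustrative.

```python
from typing import Dict, Optional, Protocol


class CustomerDirectory(Protocol):
    """The contract other components depend on; implementations stay hidden."""

    def get_customer(self, customer_id: int) -> Optional[Dict[str, str]]: ...


class LegacyDirectory:
    """Hypothetical adapter over the legacy application's tuple-based records."""

    def __init__(self) -> None:
        self._rows = {1: ("Acme", "acme@example.com")}

    def get_customer(self, customer_id: int) -> Optional[Dict[str, str]]:
        row = self._rows.get(customer_id)
        return None if row is None else {"name": row[0], "email": row[1]}


class ServiceDirectory:
    """Hypothetical replacement service with completely different internals."""

    def __init__(self) -> None:
        self._docs = {1: {"name": "Acme", "email": "acme@example.com"}}

    def get_customer(self, customer_id: int) -> Optional[Dict[str, str]]:
        doc = self._docs.get(customer_id)
        return dict(doc) if doc is not None else None


def invoice_header(directory: CustomerDirectory, customer_id: int) -> str:
    """Caller code: depends only on the interface, not on either implementation."""
    customer = directory.get_customer(customer_id)
    if customer is None:
        return "Unknown customer"
    return f"Bill to: {customer['name']} <{customer['email']}>"


print(invoice_header(LegacyDirectory(), 1))
print(invoice_header(ServiceDirectory(), 1))  # same output after the swap
```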

Failures can happen at any time, in any situation. Just as people must bounce back in life, our systems must recover from failure within a minimal time and without disrupting service for users. Resiliency is the ability of a system to recover from failures and continue to function.
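One common building block for recovering from transient failures is retrying with exponential backoff. The Python sketch below is a generic version of that technique, not something our original setup specifies; the attempt limit, delays, and which exceptions count as transient are assumptions to tune per service. The `sleep` parameter is injectable so the example can run without real waiting.

```python
import random
import time
from typing import Callable, Tuple, Type, TypeVar

T = TypeVar("T")


def call_with_retries(
    operation: Callable[[], T],
    max_attempts: int = 5,
    base_delay: float = 0.1,
    max_delay: float = 2.0,
    retry_on: Tuple[Type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Retry on transient errors using capped exponential backoff with full jitter."""
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except retry_on:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            delay = min(max_delay, base_delay * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))  # jitter spreads out retries from many clients
    # Only reached if the loop never ran.
    raise ValueError("max_attempts must be at least 1")


calls = {"count": 0}


def flaky_lookup() -> str:
    """Hypothetical operation that fails twice before succeeding."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("transient failure")
    return "ok"


print(call_with_retries(flaky_lookup, sleep=lambda seconds: None), calls["count"])  # ok 3
```

Errors outside `retry_on` (for example a `ValueError` from bad input) propagate immediately, since retrying them would not help.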

High Availability

High availability (HA) refers to a group of servers that supports business-critical applications requiring minimal downtime and continuous availability. For our production applications, we use ELB (Elastic Load Balancer) in an HA architecture that spans multiple Availability Zones.
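The sketch below is a simplified Python model of why spreading targets across Availability Zones behind a load balancer with health checks keeps the service up: requests go only to targets that pass their health checks, so losing one zone leaves the others serving traffic. It is not how ELB is implemented; the target names and zones are hypothetical, and the round-robin choice is an assumption.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import List


@dataclass
class Target:
    name: str
    availability_zone: str
    healthy: bool = True  # in practice, set by periodic health checks


def route(targets: List[Target], n_requests: int) -> List[str]:
    """Assign requests round-robin to healthy targets only."""
    pool = [t for t in targets if t.healthy]
    if not pool:
        raise RuntimeError("no healthy targets: the service is down")
    rotation = cycle(pool)
    return [next(rotation).name for _ in range(n_requests)]


targets = [
    Target("web-1", "us-east-1a"),
    Target("web-2", "us-east-1b"),
    Target("web-3", "us-east-1c"),
]
print(route(targets, 3))  # ['web-1', 'web-2', 'web-3']

# Simulate losing one Availability Zone: its target fails health checks.
targets[0].healthy = False
print(route(targets, 4))  # ['web-2', 'web-3', 'web-2', 'web-3']
```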

Disaster Recovery

We take timely, automatic backups in AWS so that we can recover from any natural or human-induced disaster.
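Backups only help if they are recent enough and kept long enough. The Python sketch below checks the age of the latest backup against a recovery point objective (RPO) and prunes backups outside a retention window. The daily schedule, the 24-hour RPO, and the 7-day retention period are assumptions for illustration; Amazon RDS, for example, lets you configure its own automated-backup retention period.

```python
from datetime import datetime, timedelta
from typing import List


def meets_rpo(backup_times: List[datetime], now: datetime, rpo: timedelta) -> bool:
    """True if the newest backup is no older than the recovery point objective."""
    return bool(backup_times) and now - max(backup_times) <= rpo


def prune(backup_times: List[datetime], now: datetime, retention: timedelta) -> List[datetime]:
    """Keep only the backups that fall inside the retention window, oldest first."""
    return sorted(t for t in backup_times if now - t <= retention)


# Hypothetical daily backups taken at 02:00 from May 1 to May 10, 2024.
backups = [datetime(2024, 5, day, 2, 0) for day in range(1, 11)]
now = datetime(2024, 5, 10, 9, 0)

print(meets_rpo(backups, now, rpo=timedelta(hours=24)))  # True: latest backup is 7 hours old
kept = prune(backups, now, retention=timedelta(days=7))
print(len(kept), kept[0])  # 7 2024-05-04 02:00:00
```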

When we design our architecture in the AWS Cloud, it is important to consider the key principles and design patterns available in AWS, including how to select the right database for our application and how to architect applications that scale horizontally and remain highly available. Because each implementation is unique, we must evaluate how to apply this guidance to our own. The topic of cloud native architectures is broad and continuously evolving.
